Getting Started With Apache Spark in Ubuntu

This is the first episode of the Apache Spark series that i wish to continue to up to advance Apache Spark Programming.

What is Apache Spark

Apache Spark is a cluster computing platform. In simpler terms it will execute your instructions in a cluster of computer instead using a single one. By doing so it will produce fast results. Apache spark is most used in Big Data Analysis but it has features which can be used for some other areas such as Machine Learning and Graph Processing. The most satisfying thing about Apache Spark is that it supports Java, Scala and python which are well known languages.

Downloading Apache Spark

Please note this installation walk through is for installing Spark on a single node. Download Apache from here.

Select Apache version and Package type. The package type is important if you have already using Apache Hadoop. Make sure you use the correct package type to avoid any collisions. But please note that Apache Hadoop is NOT a necessary per-requisite to install and use Spark.

Once download is complete you will have a tar file.

Go to the directory where the tar file is. To unpack Spark open up terminal and use following commands us usual.

..$ tar -xf spark-2.1.0-bin-hadoop2.7.tgz

..$ cd spark-2.1.0-bin-hadoop2.7

..$ ls

If all worked out well so far you should have following content in your directory.

bin - Contains the various executable files that we can use to interact with Spark in various ways. In the next step we will use use some of these.

examples - This directory contains some example Standalone Spark jobs that has been already done for you!. You go through this to get more familiar with Spark API.

Getting Started with Spark

As I said before there are few languages that we can use to work with Spark. Here I will use python in my Examples.

Lets start with something like "Hello World" in Spark Universe which is counting words in a document.

Using terminal go to the directory where Spark is.

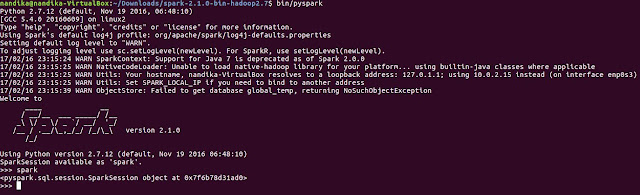

and type bin/pyspark .

Then you will see that Spark is starting as below.

Bravo!. All is set for our first Spark job. You can use CTRL+D to exit this python shell. Only two lines of code is need for our first Spark job!

lines = sc.textFile("README.md")

lines.count()

This simple program will be our Driver Program. The Driver Program will access Spark through a SparkContext object. When you execute these two lines of code. Spark creates a RDD and perform Action (count) on that RDD to produce our result.

We have reached the end. Now you Know how to install Apache Spark and run a simple on that using python.

I will explain SparkContext, RDDs, Actions and some more in our next episode.

Please comment if you have any issues following these steps and feel free to point out errors I've made. Until next time adios amigos!!

Comments